Imagine watching a video published on a Ukrainian news portal where Ukraine’s President Volodymyr Zelenskyy is seen asking his soldiers to lay down their weapons in the middle of the war.

The impact of this appeal on the ongoing Ukrainian resistance could be enormous.

Now, follow that up with another video of Russian President Vladimir Putin gladly declaring victory over Ukraine.

Welcome to the dark world of deepfakes, where forged multimedia is being used in an attempt to win an actual war. The deepfake video of Zelenskyy first surfaced on Wednesday, but the fabricated Putin video had circulated before.

On Wednesday, however, the Putin deepfake was pushed across social media platforms in tandem with the fabricated Zelenskyy video.

Officials at Meta, the social media company that runs platforms such as Facebook and Instagram, said the company had identified and removed such deepfake videos on Thursday.

“Earlier today, our teams identified and removed a deepfake video claiming to show President Zelenskyy issuing a statement he never did. It appeared on a reportedly compromised website and then started showing across the internet. We’ve quickly reviewed and removed this video for violating our policy against misleading manipulated media, and notified our peers at other platforms,” Nathaniel Gleicher, head of security policy at Meta, wrote in a post.

The Zelenskyy deepfake first appeared on the Ukrainian news portal Segodnya, and its claims were later carried on a local TV channel's news ticker. Both the news portal and the TV network later said they had been hacked. The president had to release a real video statement rejecting the claims. "If I can offer someone to lay down their arms, it's the Russian military," the Ukrainian leader said.

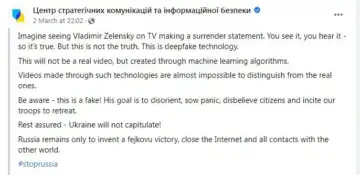

Ukrainian authorities had warned the public earlier this month that Russia might use such deepfakes. "Imagine seeing Vladimir Zelensky on TV making a surrender statement. You see it, you hear it – so it's true. But this is not the truth. This is deepfake technology," a government statement dated March 2 read.

While several experts pointed to the deepfake's poor quality, commenters in Russia "hypothesised that Zelenskyy uploaded the video in desperation and then backtracked after reconsidering", the US-based think tank Atlantic Council noted.

Both the ticker and the Zelenskyy deepfake video were also amplified on Russian social media platforms.

According to the MIT Media Lab, a research laboratory at the Massachusetts Institute of Technology in the US, there are several "DeepFake artifacts" to watch for when assessing a suspected deepfake video. Looking for anomalies in facial transformations and paying close attention to the cheeks, forehead, eyes and eyebrows can often help identify such videos.

"Do shadows appear in places that you would expect? DeepFakes often fail to fully represent the natural physics of a scene. Pay attention to the glasses. Is there any glare? Is there too much glare? Does the angle of the glare change when the person moves? Once again, DeepFakes often fail to fully represent the natural physics of lighting," the MIT Media Lab's resource on detecting deepfakes notes.

The lab's online research project, "Detect Fakes", explores how well humans and machine learning tools can identify such manipulated media generated with the help of artificial intelligence.

Evidence suggests that the technology behind widely used deepfakes is steadily improving. Until a few years ago, it was widely accepted in the research community that people in deepfakes did not blink normally. More recent deepfake videos, however, show natural blinking, a sign of the improved technology.